The Fundamentals of Machine Learning

Audience: high-school, undergraduate

Tags: neural-networks, machine-learning, gradient-descent

The last few years have brought remarkable advances in machine learning. These diverse systems are built from a few core building blocks, which are modified and combined in complex ways. These core ideas often get abstracted away when discussing the bigger picture, which leaves us wondering: “What’s going on inside?” In this interactive lesson, we will answer that question by thinking small. We will start with the simplest of models, The Line, and see how it can help us build a Neural Network capable of learning anything. Then, going back to the line, we will learn how to train it using the Gradient Descent algorithm, which we will write from scratch in Python. Audience: learners with a grounding in calculus (differentiation) and some prior programming experience who want to learn machine learning in a practical way.

Comments

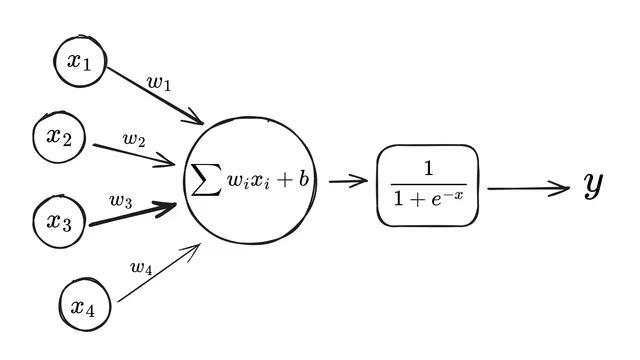

The article is well written and well paced. Concepts are introduced from first principles, and the interactive plots contribute significantly to the experience. For the most part, however, the article is about mathematical modelling and has very little relating it directly to machine learning other than the brief neural networks interlude. The sigmoids feel detached from the rest of the article, and the neural networks section as a whole could do with more development and integration. Treated as an explainer on gradient descent (which is obviously an important method in machine learning), the article is fantastic, but the title and initial aims may be overambitious, as the real-life learning aspect of the functions is only briefly covered.

interesting

I think it could’ve taken a new spin / perspective / medium, as this intro-to-ML structure is seen in many places already.

If I lose to you, I won’t be upset. This is a fantastic progressive learning example on a modern topic, with interactive examples that make sense. The math quickly goes beyond what I’m practiced in, but I love seeing how the activation functions change with multiple weights in a two-dimensional graph. I’ve not seen that yet. No notes. Keep it up.

Thanks for the tutorial! I once dug deeper into linear regression modelling via the linear least squares method, so this helped me a lot to further understand classic ML algorithms. I also like the code boxes and adjustable graphs! In general I think it is a good read. Some possible improvements: Instead of “The line”, maybe something like “we start with the line as a model, also called a linear model”. Instead of “The linear model is… well, linear.”, maybe “The line is, well, linear – a linear model”…. Then maybe a short example of a linear relation, which you provide in the chapter “fitting a model to data”. Maybe it is just me, but getting an overview of what is ahead can help readers gauge the effort of reading and give some orientation. Concerning the logistic function: maybe some more explanation of how b now became a threshold and the weights a parameter for uncertainty. The next chapter makes it more graspable, so maybe something like “we will explain this in the next chapter” can help to keep people hooked. The language of the second half felt more confident, so I guess the only things I think can be improved are aspects such as intuition, everyday examples, or conceptual framing at the beginning, even for people who are not absolute beginners. Another thing would be the history, maybe the McCulloch-Pitts neuron and the fact that it is a concept from the 1940s, which I always find super fascinating, but which is not completely necessary. …

Great interactivity - the overall narrative could be a bit more structured, as could the motivation at the beginning. Some of the diagrams were starting to feel a bit repetitive towards the end.

Thank you for this very nice introduction to ML!